📈 #23 Part II - The OR revolution: 3 AI breakthroughs that are reshaping Operations Research

Discover how AI is improving OR techniques and solving tough problems

Finally, Sunday has arrived, right? I hope the hangover allows you to read today's post, as it's quite dense 😉

In this edition of Feasible, I'm going to tell you:

How Deep Reinforcement Learning can be used to solve optimization problems.

What can be done to effectively combine Machine Learning techniques with Operations Research techniques.

Generative AI can help you solve optimization problems step by step, similar to heuristics, without requiring knowledge of mathematics or complex algorithms.

You know, this is the second part of a trilogy.

In the first part, I talked about how Quantum Computing is driving the world of optimization, and it actually sparked more debate on LinkedIn than I anticipated.

So, let's get started with this one, shall we?

15 years ago:

Penny, everything is better with Bluetooth. — Sheldon Cooper.

If The Big Bang Theory writers had to write the script now, I can assure you it would say:

Penny, everything is better with Artificial Intelligence. — Sheldon Cooper.

And it's true, AI has flooded our lives. Even my cousin used ChatGPT to make his speech at my wedding! (he mentioned it in the speech itself)

As expected, AI also contributes to - and/or benefits from - Operations Research. There are at least three areas with a clear connection between the two fields.

🤖 Deep Reinforcement Learning in decision-making problems

Deep Reinforcement Learning (DRL) is a neural network training technique that involves:

An agent making decisions

An environment with which the agent interacts

The agent has a set of possible actions on the environment, makes a decision, and executes it, while the environment changes its state and provides feedback to the agent in the form of a reward, which can be either positive or negative. The goal of the agent is to find the optimal action policy that maximizes the accumulated reward.

DRL can be used as a technique to solve combinatorial optimization problems. In these types of problems, the goal is to optimize decision-making, similar to what happens in DRL.

This approach has its advantages and disadvantages, but in general, it can be said to be a powerful technique when dealing with:

A sequential decision problem

Where decisions affect each other

And in a stochastic environment, i.e., where random events can occur

In other words, decisions need to be made while predicting how the actions taken in the interacting environment will affect it.

This is practically demonstrated in the famous video of an agent training to complete the Pokémon game:

However, if you find the Pokémon example less serious, you can always read the article where Yoshua Bengio himself proposed using DRL to solve the traveling salesman problem (TSP), or watch one of the biggest workshops I remember from 2021 on the use of Deep Learning to solve combinatorial optimization problems, with the participation of prestigious universities from around the world such as MIT, Carnegie Mellon, Georgia Tech, and Princeton, or cutting-edge companies like Google AI, IBM, and DeepMind.

Additionally, if you really want to delve into this area, I recommend visiting Warren Powell's page on Sequential Decision Analytics.

🔁 Use of Machine Learning techniques in combination with OR

I won't reproduce word for word what Anand Subramanian and Holger Teichgraeber wrote in this great article about the combination of OR and ML, but I'll give you key points to understand this well.

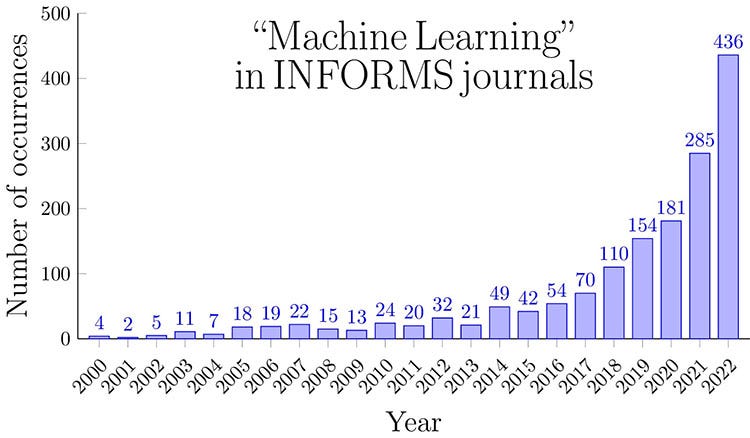

First, there is a growing demand for articles that combine both worlds. There is an exponential growth in the number of times Machine Learning is mentioned in INFORMS journals:

There may be many reasons, but the fact is that there are several effective ways to combine these techniques:

Predict to optimize

Since more and more data is used every day to make decisions based on them, and more and more companies adopt this strategy as something native and core to their business, one of the simplest ways to integrate both worlds is to do it in two steps:

Make a prediction about your problem.

Use that prediction as input for the optimization problem.

For example, if you wanted to solve the traveling salesman problem (TSP, you know), you could first make a prediction about the times between different points and use that information to solve the optimization problem more realistically. It's better to solve that problem with data closer to reality than with your calculations that take into account average distances and speeds.

This approach has traditionally been called predict-then-optimize, and it gave way to an improved version called Smart predict-then-optimize. Despite the benefits of this approach, other approaches like predict-and-optimize (predict and optimize) have emerged, aiming to integrate predictive models into optimization algorithms by considering more information about the probability functions of variables, enriching the resolution of optimization problems.

Moreover, there are already tools in the market, such as InsideOpt, that facilitate the management of these types of models.

Use of optimization in ML models

In general, one of the most widespread uses is to use optimization models to make ML models more explainable, although it is still an expanding field. There are also many heuristics among ML models.

A clear example of the latter is precisely the k-means algorithm, which is directly solved as an optimization problem because, surprise, it is an optimization problem.

Use of ML in optimization solvers

A solver is software that uses state-of-the-art techniques to solve optimization problems as efficiently as possible. These techniques are tools that the software has. Therefore, the more techniques, the more tools, and the more tools, the easier it is to solve these problems.

ML models have appeared here as models that help in different areas, such as:

In quadratic optimization problems, where the relationship between variables is non-linear, they help the solver decide when to make that relationship linear.

In combinatorial optimization problems, where branching and pruning techniques are usually used, they help the solver decide which branch is more promising to explore or prune.

In problems where there are many examples from which to learn, where relationships between the input parameterization of the optimization model and the best solutions to the problem could be extracted, traditional solvers could even be replaced by this strategy, especially if decisions have to be made almost instantly, and the problem is very complex.

All these are very good examples of how Operations Research can be combined with Machine Learning techniques.

In general, you can see them as ways to provide feedback between AI and Operations Research, and vice versa. Just as it makes people like me, who have focused on Operations Research, look at AI very favorably and want to learn more and more, the opposite is also true: people who have focused on AI are increasingly interested in Operations Research.

✍🏻 Generative AI to solve optimization problems... Without knowing mathematics or complex algorithms

It's here, and it's here to stay. We could replace Matrix with Generative AI, and the famous line from the movie would fit well today: "Generative AI is all around us. It's everywhere. Even now, in this very room."

So much so that we have large language models (LLMs) to generate text, images, audio, video. And practically in any of their combinations. From text to text, from text to image, from image to video, from image to audio...

For now, the one that interests me the most is the one that generates text, I won't lie to you.

I don't know if it can reason like an ant or a 5-year-old child, and I'm not going to get into that right now.

What I do know is that it can help you think differently and eventually solve optimization problems. I'm not saying it; researchers are saying it.

At the beginning of 2023, the latest version, and probably the one I read, of Chain of Thought was uploaded, proposing a way to interact with these AIs so that step by step, they provide better answers. And there I thought it resembled what a heuristic does when solving optimization problems. Step by step, it gives you a better solution to your problem.

Then, between late August and early September, three more articles were released, although one of them especially touched my soul. I'll list them in the order I read them:

Large language models as optimizers, by DeepMind (the one that touched my soul), proposing Optimization by Prompting, or a way to optimize with prompts - the texts we use to interact with AI. They directly talk about mathematical optimization and how to use this technique to solve the TSP and a linear regression problem.

Diagnosing infeasible optimization problems with large language models, by researchers from Purdue University (in Indiana, USA), proposing OptiChat, a tool that connects ChatGPT with Pyomo and Gurobi so that a non-expert user can create mathematical models to solve an optimization problem.

Large language models for science: a study on P vs NP, by Microsoft and different Chinese universities, proposing the use of LLMs to reason and reach conclusions about one of the Millennium Prize Problems, proposed by the Clay Mathematics Institute (where they give you 1 million dollars if you solve it). It's not exactly a case of an optimization problem per se, but algorithmic complexity theory is intimately related.

A few days later (mind you, this world moves very quickly), Microsoft released an agent based on LLMs that helps you solve optimization problems related to the supply chain. OptiGuide helps you reason about possible scenarios in which to understand what to do if the cost of a supplier for your product increases, for example. This AI connects with Gurobi, creating code for this famous solver and solving the underlying optimization problem.

In early October, DeepMind came back with another article proposing using other techniques to improve the quality of responses given by an AI.

And finally, DeepMind also published an article in Nature (one of the most prestigious scientific journals in the world) last December proposing FunSearch, an AI that generates code to solve an optimization problem, the Online Bin-Packing Problem. If you want to read something lighter than the article in Nature, they explain it well on their blog. It's not just that we have a better solution than current ones for solving that problem, but it generates code, and you can then adapt it to your circumstances. In other words, you don't have to trust what an AI tells you; if you want to modify the solutions it gives you, you just have to tweak the code.

As you can see, the world of generative AI is moving rapidly to try to solve optimization problems. These are very interesting problems, with very practical applications in many areas and are difficult to solve. If an AI helps us do it more easily, why not explore this area as well?

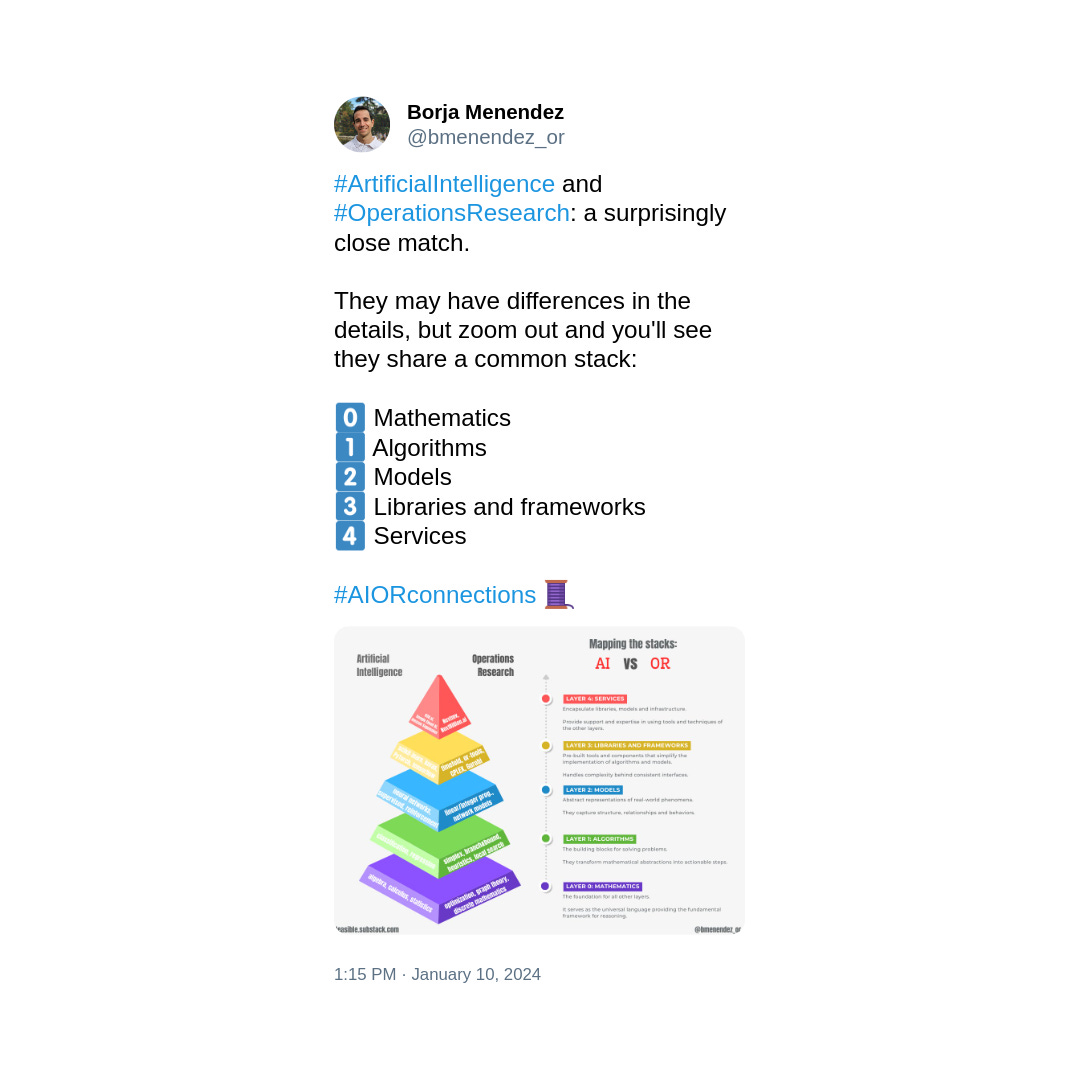

Be aware that in this post, we have seen a lot of connections between various areas of Artificial Intelligence and Operations Research.

From my point of view, they even share the same vision of the world to solve problems: they are based on mathematics, which serve to develop algorithms, which serve to develop models, which serve to develop libraries and frameworks, which serve to develop services... But I'll tell you more about this in this post on X/Twitter if you agree:

See you next week with the conclusions of these first two parts of the trilogy.

Until then,

Borja.