📈 #22 Part I - The OR revolution: the promise of Quantum Computing

The future of optimization starts now.

Happy New Year! 🎉

I truly hope you had a wonderful time with family and friends during these days.

It's important to disconnect, which is why I haven't written to you in a while. I spent a few days between Alicante and Elche, and honestly, it was fantastic for me.

So here I am again to give you a dose of content on Operations Research.

One thing I've noticed lately is a renewed interest in applying Operations Research techniques in many businesses. Two main reasons drive this interest: Quantum Computing and AI.

I wanted to explain why I see it this way in as much detail as possible, and it turned out to be quite a long read. I didn't want you to block out half an hour, so I've divided it into three different parts. In this first part, I'll tell you everything related to Quantum Computing from the Operations Research perspective:

The need for quantum computers

The existence of more efficient quantum algorithms than classical ones

How the scientific community and private companies share a common goal

The second part of this trilogy will focus on the connection between Artificial Intelligence and Operations Research, while in the third and final part, I'll share my visions and conclusions regarding the first two parts.

Let's go for it!

In 1965, Gordon E. Moore - co-founder of Intel, one of the most important microprocessor companies in the world, if not the most important - established a law stating that the number of transistors on microprocessors would double every 2 years. It's something he observed himself, and it's an empirical law that has been proven in practice since then.

Since the size of microprocessors has remained constant or even become smaller, for this law to hold, transistors must become smaller and smaller.

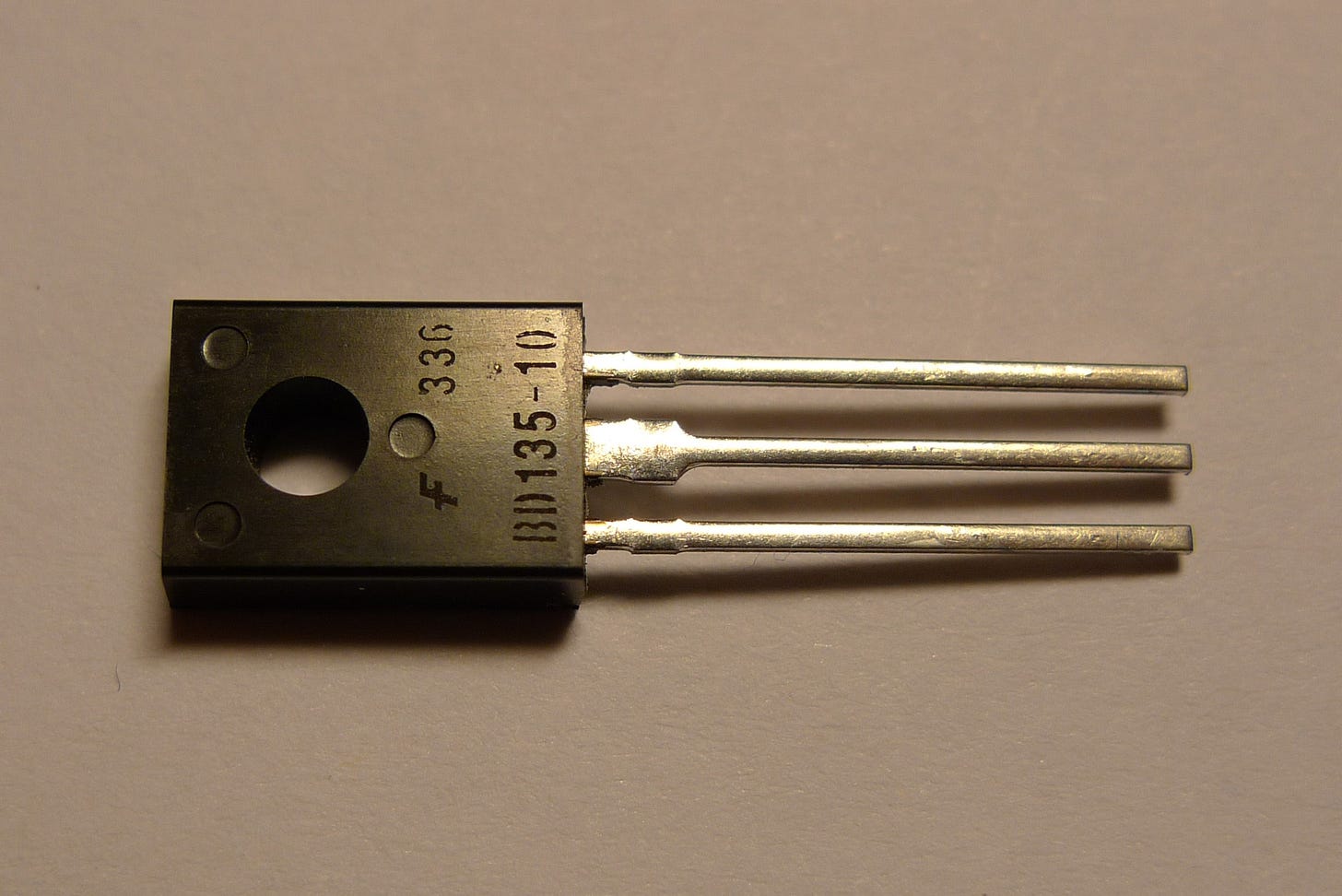

Quick pause: let's remember that a transistor is the basic building block of modern electronics for performing calculations. We usually see that the thing has a head with three legs:

When I say they have to become smaller, I mean sizes in nanometers. Take a meter and divide it into a billion parts, and that's a nanometer. Currently, microprocessors with smaller transistors achieve sizes of about 2-3 nanometers.

I won't delve into technicalities here, but as there's a lot of marketing involved, it's currently challenging to know the length of logic gates or the distance between transistors. Why? Not because it's a technological challenge in itself, but because the nomenclature is more based on strategic criteria than specific physical characteristics.

The point is that at such incredibly small scales, where the size of logic gates is comparable to the size of atoms, quantum effects become noticeable, and the transistor will behave more evidently following the laws of quantum physics, masking classical effects (just the opposite of what happens on larger scales).

This complicates things a lot.

🎲 "God does not play dice"

Despite Einstein saying that God does not play dice, we now know well that He does, that the quantum world is a world of probabilities, where you can never assert the behavior of an electron with 100% certainty.

The tunnel effect is a quantum phenomenon by which a particle violates the principles of classical mechanics (the one Einstein liked) and can make the particle pass through a potential barrier. The particle, which from a classical point of view does not have enough energy to move from one side to the other, when we are at atomic scales, has a certain probability of being on either side. This is because the spatial location of the particle at such small scales is a probability function of being in a place. The famous superposition of quantum states.

Let's say it's like throwing a tennis ball at a wall and bouncing it back (classical mechanics) or passing through it on some occasions (quantum mechanics). The first happens at a normal scale all the time, but when we go to such tiny scales, the second can happen. Note that you could also go through the wall in the classical world if you apply a lot of force to the ball; but in the quantum world, it wouldn't need as much energy.

That's the crux of the matter. And that's where quantum computing comes from.

The idea is to take advantage of this behavior - and some other very interesting properties - to continue performing calculations even if the laws of physics try to prevent us. Even if we can't precisely control the behavior of electrons in a transistor.

In essence, traditional computers (or the ones we know today) may have a limit, and we're very close to reaching it. But we can cross it thanks to quantum computing.

If classical computing has the bit as the minimum unit of computation (with states 0 or 1), quantum computing has the quantum bit... or qubit. It's called a cúbit in Spanish.

One of the most interesting properties of the qubit compared to the classical bit is the...

⚛️ Quantum State Superposition

Two states are said to be in superposition when a particle has a certain probability of being in each of them.

Remember Schrödinger's cat? The one that was in a box and could be alive and dead at the same time, but until we opened the box, we wouldn't know its state.

Or like the typical friend who always arrives late and when you ask, he always says he's on his way, but you know he has a certain probability of not having left his house yet or that he has, and this time he might be telling the truth! You would only know if you could measure it in some way: a camera, an exact GPS location...

Well, applied to bits. That is, a quantum bit, qubit... It can be in several states at once. It has a certain probability of being a 0 and another certain probability of being a 1.

This adds a parallelization of processing never before seen in a classical computer. It's as if we could try a lot of different possibilities with much fewer operations.

As you can imagine, quantum computing is a completely new field and very different from the current one. It requires specialized hardware (quantum computers) and also specialized software.

These new computers need new techniques and algorithms to make them work efficiently.

It represents a paradigm shift. A new era in computing.

And it has already been theoretically proven that there are algorithms for quantum computers that are much more powerful than the current classical ones, such as the Grover algorithm - for much more efficient database searching - or Shor's algorithm - for efficient prime number factorization, a feature on which current cybersecurity is based.

Grover's algorithm has a quadratic advantage over its classical counterpart, while Shor's has an exponential advantage. In short, the advancement of quantum computing is making algorithms solve increasingly complex problems in less time.

But now we're entering the realm of algorithmic complexity theory. Let's get back to the point.

Do you see where this is going? Much more efficient algorithms, processing parallelization... If we're talking about being able to solve problems with more combinatorics in less time, it means that these advances can directly impact the world of optimization.

It's not proven yet, but there are many efforts to find more efficient algorithms that solve optimization problems that are currently very difficult or impossible to solve.

Will it be achieved? No idea, but there's a certain probability that it will.

🧑🏻🔬 The scientific community is betting on it

The point is that a good part of the scientific community is dedicating itself wholeheartedly to finding those optimization algorithms.

One of the most active researchers and communicators is Amira Abbas, who recently shared a 70-page article signed by herself and 44 other researchers that precisely discusses the potential, challenges, and the path to developing quantum algorithms and properly comparing them with classical ones.

The first words of the article say (I won't change a single comma):

Recent advances in quantum computers are demonstrating the ability to solve problems on a scale beyond classical simulation by brute force. As a result, there has been widespread interest in quantum algorithms in many areas, with optimization being one of the most prominent domains.

Renowned researchers say it, and the industry has jumped on the bandwagon, of course. Major consulting firms like McKinsey were already talking in 2020 about the impact of quantum computing in different sectors, different use cases in 2021, or an introduction to quantum optimizers recently. As a summary, you can check their page on the rise of quantum computing.

BCG also talked in 2020 about use cases in finance (pure optimization, I'll tell you in advance) or how quantum computing is already being considered a feasible technology to solve current problems, many of them related to optimization. Feel free to check out their summary page.

All of this to tell you that yes, quantum computing has a lot to say in the world of optimization.

Many people are working on it, and a lot of money is on the table.

And all these people from other areas are newcomers to the world of optimization, bringing a new and intriguing perspective.

The best part is that all these people are expanding the boundaries of knowledge about optimization.

The best part is that now many more people are aware of Operations Research.

The best part is that the best is yet to come.

See you next week with the second part of this trilogy.

Until then,

Borja.